Community Qualified Configurations

Dell’s Cert-CSI is a tool to validate Dell CSI Drivers. It contains various test suites to validate the drivers and addresses the complexity involved in certifying Dell CSI drivers in different customer environments.

Any orchestrator platform, operating system or version that is not mentioned in the support matrix but self-certified by the customer using cert-csi is supported for customer use.

You may qualify your environment for Dell CSI Drivers by executing the Run All Test Suites workflow.

Please submit your test results for our review here. If the results are a success, the orchestrator platform and version will be published under Community Qualified Configurations.

There are three methods of installing cert-csi.

The executable from the GitHub Release only supports Linux. Non-Linux users must build the cert-csi executable locally.

NOTE: Please ensure you delete any previously downloaded Cert-CSI binaries, as each release uses the same name (cert-csi). After installing the latest version, run the cert-csi -v command to verify the installed version.

Download cert-csi from here

Set the execute permission before running it.

chmod +x ./cert-csi

sudo install -o root -g root -m 0755 cert-csi /usr/local/bin/cert-csi

If you do not have root access on the target system, you can still install cert-csi to the ~/.local/bin directory:

chmod +x ./cert-csi

mkdir -p ~/.local/bin

mv ./cert-csi ~/.local/bin/cert-csi

# and then append (or prepend) ~/.local/bin to $PATH
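The PATH step above can be sketched as a small shell helper. This is a minimal sketch, not part of cert-csi: `path_prepend` is a hypothetical function name, and it assumes you want `~/.local/bin` searched before system directories. Putting it in your shell profile makes the change persistent.

```shell
# Prepend a directory to PATH unless it is already listed.
path_prepend() {
  case ":$PATH:" in
    *":$1:"*) ;;            # already present: leave PATH unchanged
    *) PATH="$1:$PATH" ;;   # otherwise put it first
  esac
}

path_prepend "$HOME/.local/bin"
export PATH
```

Because the helper checks for an existing entry first, sourcing the profile repeatedly does not grow PATH.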

docker pull quay.io/dell/container-storage-modules/cert-csi:v1.7.0

podman pull quay.io/dell/container-storage-modules/cert-csi:v1.7.0

git clone -b "v1.7.0" https://github.com/dell/cert-csi.git && cd cert-csi

make build # the cert-csi executable will be in the working directory

chmod +x ./cert-csi # if building on *nix machine

# uses podman if available, otherwise uses docker. The resulting image is tagged cert-csi:latest

make docker

If you want to collect csi-driver resource usage metrics, provide the namespace where the driver can be found and install the metric-server using this command (kubectl is required):

make install-ms

cert-csi --help

docker run --rm -it -v ~/.kube/config:/root/.kube/config quay.io/dell/container-storage-modules/cert-csi:v1.7.0 --help

podman run --rm -it -v ~/.kube/config:/root/.kube/config quay.io/dell/container-storage-modules/cert-csi:v1.7.0 --help

The following sections, which show how to execute the various test suites, use the executable for brevity. Executions that require special handling with the container image, such as mounting file arguments into the container, are noted at the relevant command.

Log files are located in the logs directory in the working directory of cert-csi.

Report files are located in the default $HOME/.cert-csi/reports directory.

The database (SQLite) file for test suites is <storage-class-name>.db in the working directory of cert-csi.

The database (SQLite) file for functional test suites is cert-csi-functional.db in the working directory of cert-csi.

NOTE: If using the container image, these files will be inside the container. If you are interested in these files, it is recommended to use the executable.

You can use cert-csi to launch a test run against multiple storage classes to check if the driver adheres to advertised capabilities.

To run the test suites, you need to provide a YAML config file that lists your storage classes and their capabilities. You can use example-certify-config.yaml as an example.

Template:

storageClasses:
  - name: # storage-class-name (ex. powerstore)
    minSize: # minimal size for your sc (ex. 1Gi)
    rawBlock: # is Raw Block supported (true or false)
    expansion: # is volume expansion supported (true or false)
    clone: # is volume cloning supported (true or false)
    snapshot: # is volume snapshotting supported (true or false)
    RWX: # is ReadWriteMany volume access mode supported for non RawBlock volumes (true or false)
    volumeHealth: # set this to enable the execution of the VolumeHealthMetricsSuite (true or false)
      # Make sure to enable healthMonitor for the driver's controller and node pods before running this suite. It is recommended to use a smaller interval time for this sidecar and pass the required arguments.
    VGS: # set this to enable the execution of the VolumeGroupSnapSuite (true or false)
      # Additionally, make sure to provide the necessary required arguments such as volumeSnapshotClass, vgs-volume-label, and any others as needed.
    RWOP: # set this to enable the execution of the MultiAttachSuite with the AccessMode set to ReadWriteOncePod (true or false)
    ephemeral: # if exists, then run EphemeralVolumeSuite. See the Ephemeral Volumes suite section for example Volume Attributes
      driver: # driver name for EphemeralVolumeSuite (e.g., csi-vxflexos.dellemc.com)
      fstype: # fstype for EphemeralVolumeSuite
      volumeAttributes: # volume attrs for EphemeralVolumeSuite.
        attr1: # volume attr for EphemeralVolumeSuite
        attr2: # volume attr for EphemeralVolumeSuite
    capacityTracking: # capacityTracking test requires the storage class to have volume binding mode as 'WaitForFirstConsumer'
      driverNamespace: # namespace where driver is installed
      pollInterval: # duration to poll capacity (e.g., 2m)

Driver specific examples:

storageClasses:
  - name: vxflexos
    minSize: 8Gi
    rawBlock: true
    expansion: true
    clone: true
    snapshot: true
    RWX: false
    ephemeral:
      driver: csi-vxflexos.dellemc.com
      fstype: ext4
      volumeAttributes:
        volumeName: "my-ephemeral-vol"
        size: "8Gi"
        storagepool: "sample"
        systemID: "sample"
  - name: vxflexos-nfs
    minSize: 8Gi
    rawBlock: false
    expansion: true
    clone: true
    snapshot: true
    RWX: true
    RWOP: false
    ephemeral:
      driver: csi-vxflexos.dellemc.com
      fstype: "nfs"
      volumeAttributes:
        volumeName: "my-ephemeral-vol"
        size: "8Gi"
        storagepool: "sample"
        systemID: "sample"
    capacityTracking:
      driverNamespace: vxflexos
      pollInterval: 2m

storageClasses:
  - name: isilon
    minSize: 8Gi
    rawBlock: false
    expansion: true
    clone: true
    snapshot: true
    RWX: true
    ephemeral:
      driver: csi-isilon.dellemc.com
      fstype: nfs
      volumeAttributes:
        size: "10Gi"
        ClusterName: "sample"
        AccessZone: "sample"
        IsiPath: "/ifs/data/sample"
        IsiVolumePathPermissions: "0777"
        AzServiceIP: "192.168.2.1"
    capacityTracking:
      driverNamespace: isilon
      pollInterval: 2m

storageClasses:
  - name: powermax-iscsi
    minSize: 5Gi
    rawBlock: true
    expansion: true
    clone: true
    snapshot: true
    capacityTracking:
      driverNamespace: powermax
      pollInterval: 2m
  - name: powermax-nfs
    minSize: 5Gi
    rawBlock: false
    expansion: true
    clone: false
    snapshot: false
    RWX: true
    RWOP: false
    capacityTracking:
      driverNamespace: powermax
      pollInterval: 2m

storageClasses:
  - name: powerstore
    minSize: 5Gi
    rawBlock: true
    expansion: true
    clone: true
    snapshot: true
    RWX: false
    ephemeral:
      driver: csi-powerstore.dellemc.com
      fstype: ext4
      volumeAttributes:
        arrayID: "arrayid"
        protocol: iSCSI
        size: 5Gi
  - name: powerstore-nfs
    minSize: 5Gi
    rawBlock: false
    expansion: true
    clone: true
    snapshot: true
    RWX: true
    RWOP: false
    ephemeral:
      driver: csi-powerstore.dellemc.com
      fstype: "nfs"
      volumeAttributes:
        arrayID: "arrayid"
        protocol: NFS
        size: 5Gi
        nasName: "nas-server"
    capacityTracking:
      driverNamespace: powerstore
      pollInterval: 2m

storageClasses:
  - name: unity-iscsi
    minSize: 3Gi
    rawBlock: true
    expansion: true
    clone: true
    snapshot: true
    RWX: false
    ephemeral:
      driver: csi-unity.dellemc.com
      fstype: ext4
      volumeAttributes:
        arrayId: "array-id"
        storagePool: pool-name
        protocol: iSCSI
        size: 5Gi
  - name: unity-nfs
    minSize: 3Gi
    rawBlock: false
    expansion: true
    clone: true
    snapshot: true
    RWX: true
    RWOP: false
    ephemeral:
      driver: csi-unity.dellemc.com
      fstype: "nfs"
      volumeAttributes:
        arrayId: "array-id"
        storagePool: pool-name
        protocol: NFS
        size: 5Gi
        nasServer: "nas-server"
        nasName: "nas-name"
    capacityTracking:
      driverNamespace: unity
      pollInterval: 2m

If storageClasses.clone is true, executes the Volume Cloning suite.

If storageClasses.expansion is true, executes the Volume Expansion suite.

If storageClasses.expansion is true and storageClasses.rawBlock is true, executes the Volume Expansion suite with raw block volumes.

If storageClasses.snapshot is true, executes the Snapshot suite and the Replication suite.

If storageClasses.rawBlock is true, executes the Multi-Attach Volume suite with raw block volumes.

If storageClasses.rwx is true, executes the Multi-Attach Volume suite. (Storage Class must be NFS.)

If storageClasses.volumeHealth is true, executes the Volume Health Metrics suite.

If storageClasses.rwop is true, executes the Multi-Attach Volume suite with the volume access mode ReadWriteOncePod.

If storageClasses.ephemeral exists, executes the Ephemeral Volumes suite.

If storageClasses.vgs is true, executes the Volume Group Snapshot suite.

If storageClasses.capacityTracking exists, executes the Storage Class Capacity Tracking suite.

NOTE: For testing/debugging purposes, it can be useful to use the --no-cleanup flag so resources do not get deleted.
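To preview which suites a given set of capabilities would trigger, the rules above can be written out as a small shell function. This is an illustrative sketch only: `planned_suites` is a hypothetical helper, not a cert-csi command, and for brevity it covers only the clone, expansion, rawBlock, snapshot, and rwx rules.

```shell
# Print the suites implied by a storage class's capabilities.
# Arguments are key=true pairs; anything else is treated as false.
planned_suites() (
  clone=false; expansion=false; rawBlock=false; snapshot=false; rwx=false
  for kv in "$@"; do
    case "$kv" in
      clone=true)     clone=true ;;
      expansion=true) expansion=true ;;
      rawBlock=true)  rawBlock=true ;;
      snapshot=true)  snapshot=true ;;
      rwx=true)       rwx=true ;;
    esac
  done
  if [ "$clone" = true ]; then echo "Volume Cloning"; fi
  if [ "$expansion" = true ]; then echo "Volume Expansion"; fi
  if [ "$expansion" = true ] && [ "$rawBlock" = true ]; then
    echo "Volume Expansion (raw block)"
  fi
  if [ "$snapshot" = true ]; then echo "Snapshot"; echo "Replication"; fi
  if [ "$rawBlock" = true ]; then echo "Multi-Attach Volume (raw block)"; fi
  if [ "$rwx" = true ]; then echo "Multi-Attach Volume"; fi
)

# Example: a block storage class with cloning and expansion enabled.
planned_suites clone=true expansion=true rawBlock=true
```

The function body runs in a subshell, so its variables do not leak into the calling shell.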

NOTE: If you are using CSI PowerScale with SmartQuotas disabled, the Volume Expansion suite is expected to time out due to the way PowerScale provisions storage. Set storageClasses.expansion to false to skip this suite.

cert-csi certify --cert-config <path-to-config> --vsc <volume-snapshot-class>

Omit the --vsc argument if snapshot capabilities are disabled.

cert-csi certify --cert-config <path-to-config>

Optional Params:

--vsc: volume snapshot class, required if you specified snapshot capability

Run cert-csi certify -h for more options.
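The relationship between the snapshot capability and --vsc can be checked before launching a run. The sketch below is an assumption-laden convenience wrapper, not part of cert-csi: `needs_vsc` and `certify_cmd` are hypothetical names, the check is a simple text match on `snapshot: true` rather than a real YAML parse, and the function only prints the command instead of running it.

```shell
# Succeed if any storage class in the config sets "snapshot: true".
needs_vsc() {
  grep -Eq '^[[:space:]]*snapshot:[[:space:]]*true[[:space:]]*(#.*)?$' "$1"
}

# Print the certify command for a config file.
# $1 = path to cert-config, $2 = volume snapshot class (optional).
certify_cmd() (
  config=$1
  vsc=${2:-}
  if needs_vsc "$config"; then
    if [ -z "$vsc" ]; then
      echo "error: $config enables snapshot, so --vsc is required" >&2
      exit 1
    fi
    printf 'cert-csi certify --cert-config %s --vsc %s\n' "$config" "$vsc"
  else
    printf 'cert-csi certify --cert-config %s\n' "$config"
  fi
)
```

For example, `certify_cmd ./example-certify-config.yaml my-snapclass` prints the full command when the config enables snapshots (`my-snapclass` is a placeholder name), and the call fails with an error if the snapshot class is left out.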

If you are using the container image, the cert-config file must be mounted into the container. Assuming your cert-config file is /home/user/example-certify-config.yaml, here are examples of how to execute this suite with the container image.

docker run --rm -it -v ~/.kube/config:/root/.kube/config -v /home/user/example-certify-config.yaml:/example-certify-config.yaml quay.io/dell/container-storage-modules/cert-csi:v1.7.0 certify --cert-config /example-certify-config.yaml --vsc <volume-snapshot-class>

podman run --rm -it -v ~/.kube/config:/root/.kube/config -v /home/user/example-certify-config.yaml:/example-certify-config.yaml quay.io/dell/container-storage-modules/cert-csi:v1.7.0 certify --cert-config /example-certify-config.yaml --vsc <volume-snapshot-class>

NOTE: For testing/debugging purposes, it can be useful to use the --no-cleanup flag so resources do not get deleted.
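The container invocation follows a fixed pattern: mount the kubeconfig, mount the config file at the container root, and pass the in-container path to --cert-config. A minimal sketch of that pattern follows. `container_runtime` and `container_certify_cmd` are hypothetical helper names, and preferring podman over docker is an assumption borrowed from the behavior described for make docker. The function prints the command rather than running it.

```shell
# Pick podman when it is installed, otherwise fall back to docker.
container_runtime() {
  if command -v podman >/dev/null 2>&1; then
    echo podman
  else
    echo docker
  fi
}

# Print the containerized certify command.
# $1 = host path of the cert-config file, $2 = volume snapshot class.
container_certify_cmd() (
  config=$1
  vsc=$2
  name=$(basename "$config")   # file is mounted at /<basename> in the container
  printf '%s run --rm -it -v ~/.kube/config:/root/.kube/config -v %s:/%s %s certify --cert-config /%s --vsc %s\n' \
    "$(container_runtime)" "$config" "$name" \
    "quay.io/dell/container-storage-modules/cert-csi:v1.7.0" "$name" "$vsc"
)

container_certify_cmd /home/user/example-certify-config.yaml my-snapclass
```

Here `my-snapclass` is a placeholder for your volume snapshot class.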

Creates the namespace volumeio-test-* where resources will be created.

If the storage class volume binding mode is not WaitForFirstConsumer, waits for Persistent Volume Claims to be bound to Persistent Volumes.

cert-csi test vio --sc <storage class>

Run cert-csi test vio -h for more options.

Creates the namespace scale-test-* where resources will be created.

cert-csi test scaling --sc <storage class>

Run cert-csi test scaling -h for more options.

Creates the namespace snap-test-* where resources will be created.

If the storage class volume binding mode is not WaitForFirstConsumer, waits for the Persistent Volume Claim to be bound to Persistent Volumes.

cert-csi test snap --sc <storage class> --vsc <volume snapshot class>

Run cert-csi test snap -h for more options.

Creates the namespace vgs-snap-test-* where resources will be created.

If the storage class volume binding mode is not WaitForFirstConsumer, waits for the Persistent Volume Claim to be bound to Persistent Volumes.

Note: Volume Group Snapshots are only supported by CSI PowerFlex and CSI PowerStore.

Creates the namespace mas-test-* where resources will be created.

cert-csi test multi-attach-vol --sc <storage class>

The storage class must be an NFS storage class. Otherwise, raw block volumes must be used.

cert-csi test multi-attach-vol --sc <storage class> --block

Run cert-csi test multi-attach-vol -h for more options.

Creates the namespace replication-suite-* where resources will be created.

cert-csi test replication --sc <storage class> --vsc <snapshot class>

Run cert-csi test replication -h for more options.

Creates the namespace clonevolume-suite-* where resources will be created.

cert-csi test clone-volume --sc <storage class>

Run cert-csi test clone-volume -h for more options.

Creates the namespace volume-expansion-suite-* where resources will be created.

Raw block volumes cannot be verified since there is no filesystem.

If you are using CSI PowerScale with SmartQuotas disabled, the Volume Expansion suite is expected to time out due to the way PowerScale provisions storage.

cert-csi test expansion --sc <storage class>

Run cert-csi test expansion -h for more options.

Creates the namespace block-snap-test-* where resources will be created.

If the storage class volume binding mode is not WaitForFirstConsumer, waits for the Persistent Volume Claim to be bound to Persistent Volumes.

cert-csi test blocksnap --sc <storageClass> --vsc <snapshotclass>

Run cert-csi test blocksnap -h for more options.

Creates the namespace volume-health-metrics-* where resources will be created.

cert-csi test volumehealthmetrics --sc <storage-class> --driver-ns <driver-namespace>

Run cert-csi test volumehealthmetrics -h for more options.

Note: Make sure to enable healthMonitor for the driver’s controller and node pods before running this suite. It is recommended to use a smaller interval time for this sidecar.

Creates the namespace functional-test where resources will be created.

cert-csi test ephemeral-volume --driver <driver-name> --attr ephemeral-config.properties

Run cert-csi test ephemeral-volume -h for more options.

--driver is the name of a CSI Driver from the output of kubectl get csidriver (e.g., csi-vxflexos.dellemc.com). This suite does not delete resources on success.

If you are using the container image, the attr file must be mounted into the container. Assuming your attr file is /home/user/ephemeral-config.properties, here are examples of how to execute this suite with the container image.

docker run --rm -it -v ~/.kube/config:/root/.kube/config -v /home/user/ephemeral-config.properties:/ephemeral-config.properties quay.io/dell/container-storage-modules/cert-csi:v1.7.0 test ephemeral-volume --driver <driver-name> --attr /ephemeral-config.properties

podman run --rm -it -v ~/.kube/config:/root/.kube/config -v /home/user/ephemeral-config.properties:/ephemeral-config.properties quay.io/dell/container-storage-modules/cert-csi:v1.7.0 test ephemeral-volume --driver <driver-name> --attr /ephemeral-config.properties

Sample ephemeral-config.properties (key/value pair)

csi-vxflexos.dellemc.com:

volumeName=my-ephemeral-vol
size=10Gi
storagepool=sample
systemID=sample

csi-isilon.dellemc.com:

size=10Gi
ClusterName=sample
AccessZone=sample
IsiPath=/ifs/data/sample
IsiVolumePathPermissions=0777
AzServiceIP=192.168.2.1

csi-powerstore.dellemc.com:

size=10Gi
arrayID=sample
nasName=sample
nfsAcls=0777

csi-unity.dellemc.com:

size=10Gi
arrayId=sample
protocol=iSCSI
thinProvisioned=true
isDataReductionEnabled=false
tieringPolicy=1
storagePool=pool_2
nasName=sample
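Because the attribute file is plain key=value text, a quick format check before a run can catch typos early. The following is a minimal sketch: `check_properties` is a hypothetical helper, not part of cert-csi, and it assumes that blank lines and lines starting with # may be ignored, which the source does not confirm. It does not check whether the keys are valid for a given driver.

```shell
# Check that every non-empty, non-comment line of a properties file is key=value.
# Prints offending lines (with line numbers) and returns non-zero if any exist.
check_properties() (
  file=$1
  bad=$(grep -nEv '^[[:space:]]*(#.*)?$|^[^=[:space:]]+=.+$' "$file")
  if [ -n "$bad" ]; then
    printf 'malformed lines in %s:\n%s\n' "$file" "$bad" >&2
    exit 1
  fi
)

# Example using the sample attributes with volumeName, storagepool, and systemID.
props="$(mktemp)"
cat > "$props" <<'EOF'
volumeName=my-ephemeral-vol
size=10Gi
storagepool=sample
systemID=sample
EOF
check_properties "$props" && echo "ok: $props"
```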

Creates the namespace functional-test where resources will be created.

Storage class must use volume binding mode WaitForFirstConsumer.

This suite does not delete resources on success.

cert-csi functional-test capacity-tracking --sc <storage-class> --drns <driver-namespace>

Run cert-csi functional-test capacity-tracking -h for more options.

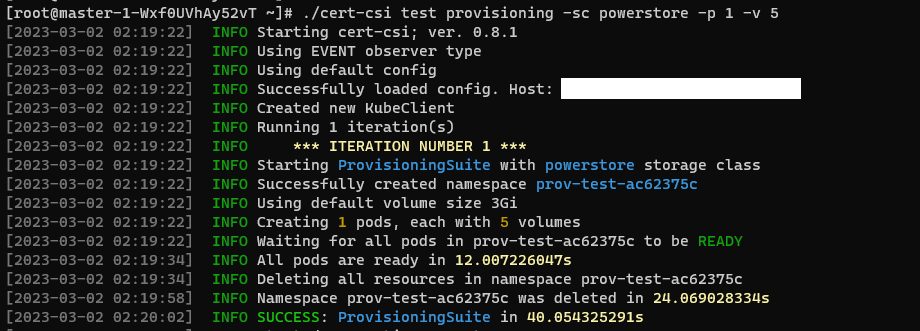

cert-csi test <suite-name> --sc <storage class> --longevity <number of iterations>

To use custom images for creating containers, pass an image config YAML file as an argument. The YAML file should list the linux (test) and postgres image names with their corresponding image URLs.

Example:

images:
  - test: "docker.io/centos:centos7" # change this to your url
    postgres: "docker.io/bitnami/postgresql:11.8.0-debian-10-r72" # change this to your url

To use this feature, run cert-csi with the option --image-config /path/to/config.yaml along with any other arguments.

All Kubernetes end-to-end tests require that you provide the driver config for the storage class you want to test and the version of Kubernetes you want to test against. These mandatory parameters are passed on the command line as follows:

--driver-config <path of driver config file> and --version "v1.25.0"

To run Kubernetes end-to-end tests, run the command:

cert-csi k8s-e2e --config <kube config> --driver-config <path to driver config> --focus <regex pattern to focus, e.g. "External.Storage.*"> --timeout <timeout, e.g. "2h"> --version <version of k8s, e.g. "v1.25.0"> --skip-tests <file listing the tests to skip> --skip <regex pattern of tests to skip, e.g. "Generic Ephemeral-volume|(block volmode)">

Reports are written to the $HOME/reports directory by default if the user does not specify a report path.

The execution log is written to $HOME/reports/execution_[storage class name].log.

Log files: info.log, error.log

cert-csi k8s-e2e --config "/root/.kube/config" --driver-config "/root/e2e_config/config-nfs.yaml" --focus "External.Storage.*" --timeout "2h" --version "v1.25.0" --skip-tests "/root/e2e_config/ignore.yaml"

./cert-csi k8s-e2e --config "/root/.kube/config" --driver-config "/root/e2e_config/config-iscsi.yaml" --focus "External.Storage.*" --timeout "2h" --version "v1.25.0" --focus-file "capacity.go"

To generate a test report from the database, run the command:

cert-csi --db <path/to/.db> report --testrun <test-run-name> --html --txt

Report types:

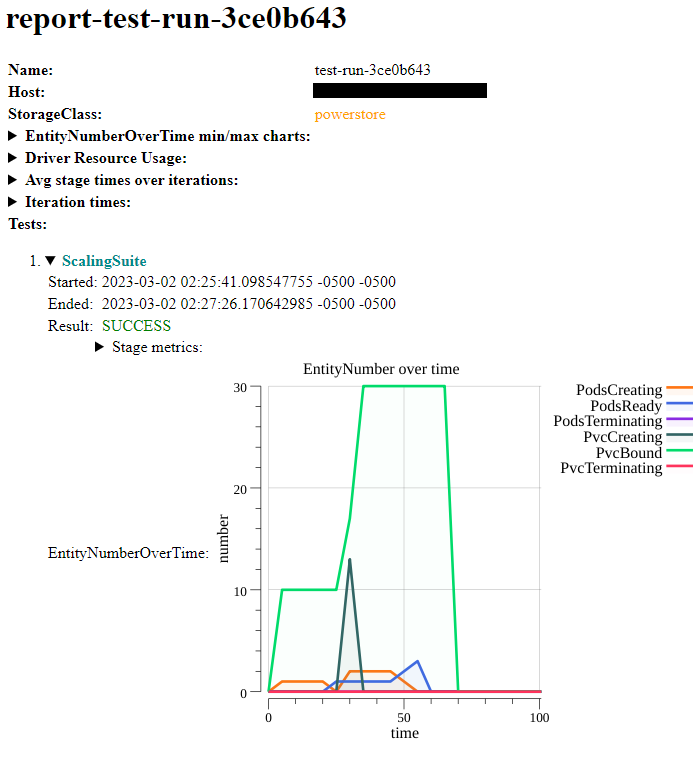

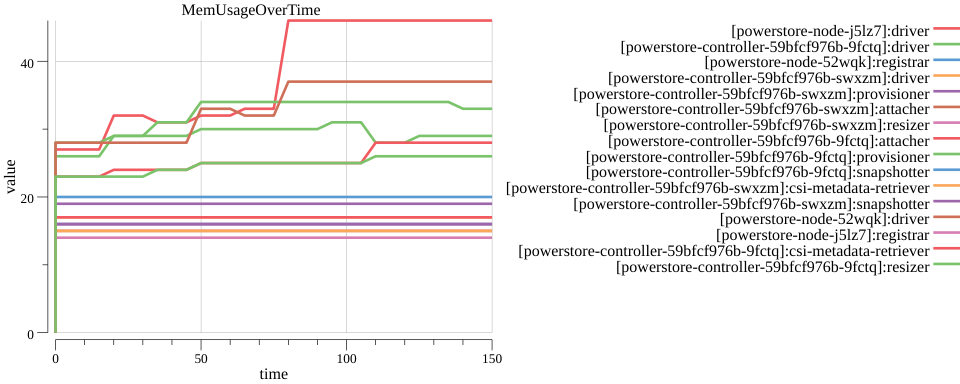

--html: performance html report

--txt: performance txt report

--xml: junit compatible xml report, contains basic run information

--tabular: tidy html report with basic run information

To generate a tabular report from the database, run the command:

cert-csi --db ./cert-csi-functional.db functional-report --tabular

To generate an XML report from the database, run the command:

cert-csi --db ./cert-csi-functional.db functional-report --xml

To specify the test report folder path, use the --path option as follows:

cert-csi --db <path/to/.db> report --testrun <test-run-name> --path <path-to-folder>

Options:

--path: path to folder where reports will be created (if not specified ~/.cert-csi/ will be used)

To generate a report from multiple databases, run the command:

cert-csi report --tr <db-path>:<test-run-name> --tr ... --tabular --xml

Supported report types:

--xml

--tabular
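When several storage classes have been tested, each leaves its own database, so the multi-database command needs one --tr option per database. The sketch below assembles that command from a list of pairs. `multi_db_report_cmd` is a hypothetical helper, the database and test run names in the example are placeholders, and the function prints the command rather than executing it.

```shell
# Print a multi-database report command.
# Each argument is a "<db-path>:<test-run-name>" pair and becomes one --tr option.
multi_db_report_cmd() (
  if [ "$#" -eq 0 ]; then
    echo "error: at least one <db-path>:<test-run-name> pair is required" >&2
    exit 1
  fi
  cmd="cert-csi report"
  for pair in "$@"; do
    case "$pair" in
      ?*:?*) cmd="$cmd --tr $pair" ;;
      *) echo "error: expected <db-path>:<test-run-name>, got '$pair'" >&2; exit 1 ;;
    esac
  done
  printf '%s --tabular --xml\n' "$cmd"
)

multi_db_report_cmd ./powerstore.db:run-a ./powerstore-nfs.db:run-b
```

The test run names can be looked up per database with the list test-runs command.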

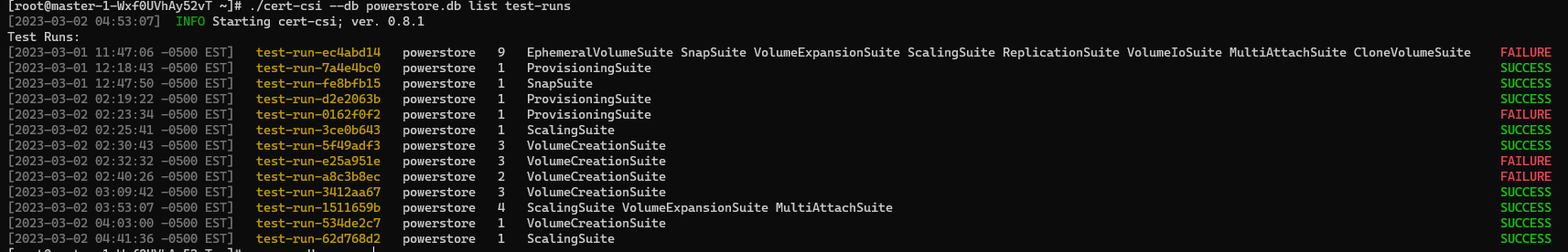

To list all test runs, run the command:

cert-csi --db <path/to/.db> list test-runs

To specify the test report folder path, use the --path option as follows:

cert-csi <command> --path <path-to-folder>

Commands:

test <any-subcommand>

certify

report

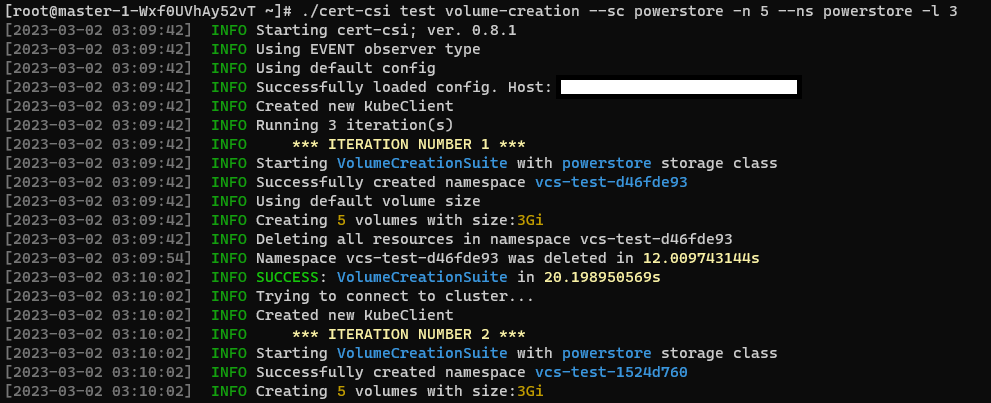

To run tests with driver resource usage metrics enabled, run the command:

cert-csi test <test suite> --sc <storage class> <...> --ns <driver namespace>

To run tests with custom hooks, run the command:

cert-csi test <test suite> --sc <storage class> <...> --sh ./hooks/start.sh --rh ./hooks/ready.sh --fh ./hooks/finish.sh
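The hook paths in the command above point at ordinary executable scripts. As a starting point, the sketch below creates three placeholder hooks that only record when they were called. It assumes, from the flag names alone, that the scripts correspond to start, ready, and finish stages; what cert-csi passes to each hook, if anything, is not stated here. `HOOK_LOG` is a hypothetical variable used only by these sample scripts.

```shell
# Create minimal hook scripts that append a timestamped line to a log file.
hooks_dir=./hooks
mkdir -p "$hooks_dir"
for stage in start ready finish; do
  cat > "$hooks_dir/$stage.sh" <<EOF
#!/bin/sh
# Sample $stage hook: record when it was invoked.
echo "\$(date -u +%Y-%m-%dT%H:%M:%SZ) $stage hook invoked" >> "\${HOOK_LOG:-./hooks.log}"
EOF
  chmod +x "$hooks_dir/$stage.sh"
done
```

Replace the echo line in each script with whatever your environment needs at that stage.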

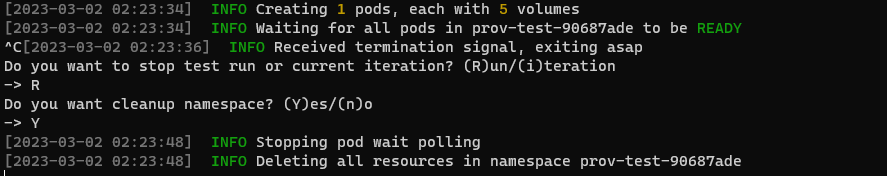

You can interrupt the application by sending an interrupt signal (for example, by pressing Ctrl + C). It will stop polling and try to clean up resources.

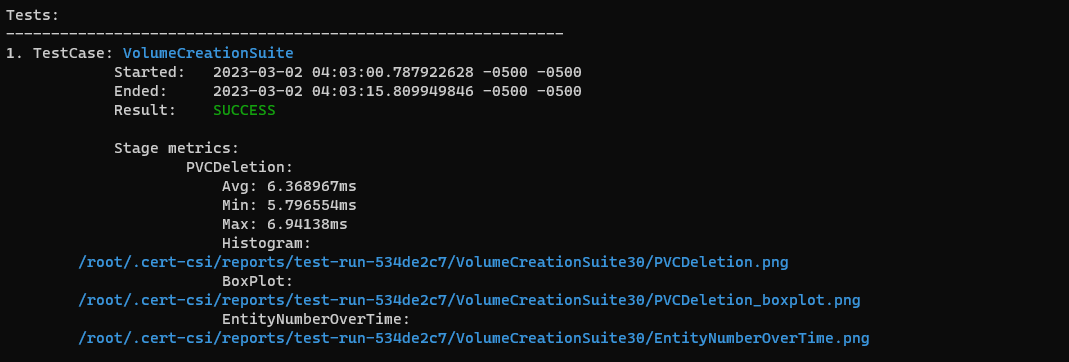

Text report example

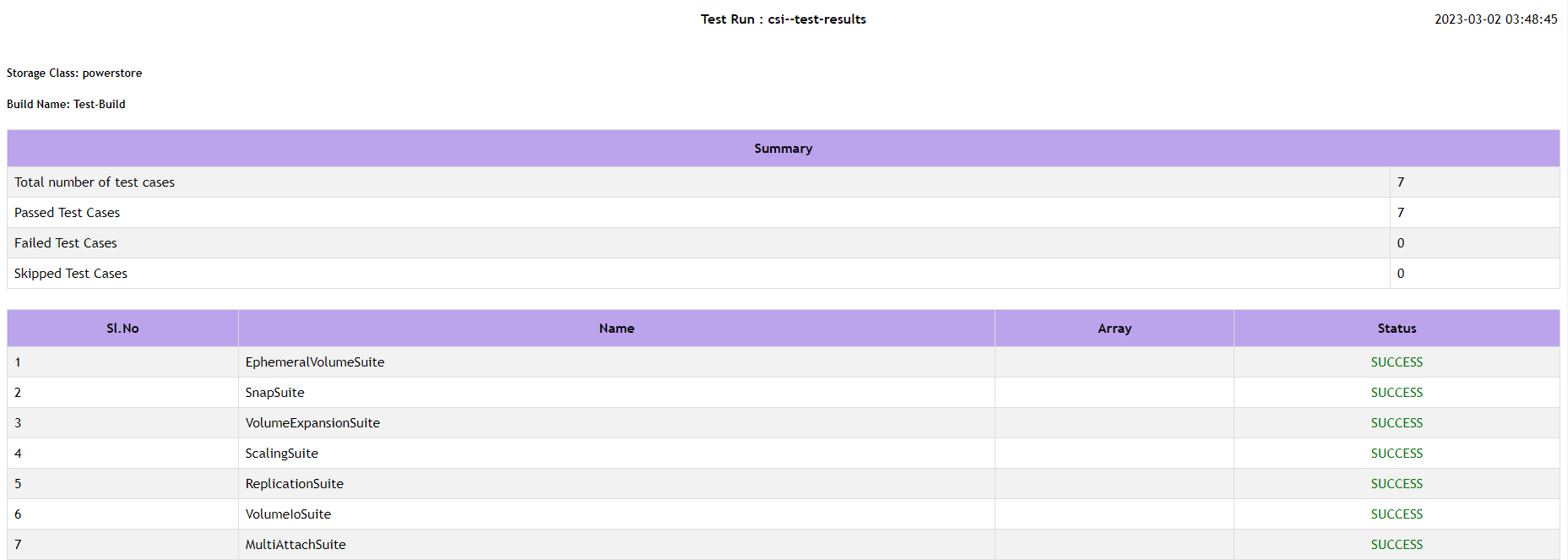

Tabular Report example
